Projects

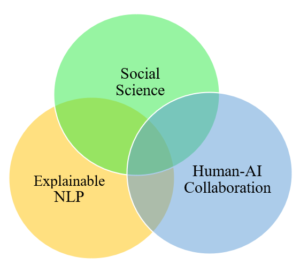

Explainable Natural Language Processing for Social Sciences

Explanatory learning models in Natural Language Processing (NLP) are models that provide explanations for their predictions or decisions, helping humans understand why a particular output was produced. This matters because people who understand the reasoning behind a decision are more likely to trust the system and use it effectively. Explanatory models can also help identify biases in the system and improve its overall performance.

In this project, we aim to present a method for increasing interpretability. We study different explanatory learning models in the field of NLP and seek to improve how humans interact with, understand, and use these models in collaboration with them.